Modeling data entails creating abstract representations of data relationships and processes in the form of charts, symbols, and words. Engineers utilize these concepts when creating new software or improving older programs. Modeling data also checks for errors and improves data quality. Database schemas and data models are not the same thing. The data model is an underlying architecture that dictates what kinds of data can exist in a database, whereas a schema is a blueprint for a database.

Table of Contents

- What is a Data modeller?

- Data Modeling and Snowflakes

- Advantages of data modelling

- Approaches Types

- Types of data models

- Role of a modeller

- Comparison of Data Modeling and Database Design

- Cloud Data modelling and Snowflake data

- Why is Data Modeling crucial?

- Data Lifecycle Changes requirements

- What is the Single Most Crucial Factor when Modeling Data?

- What is the Data Vault?

- Methods for Data Modeling in Contemporary Cloud-Based Databases

- Conclusion

What is a Data modeller?

Data modelers take high-level ideas for software systems and convert them into more digestible visual representations by means of symbols as well as textual descriptions of appropriate data flows. To guarantee an accurate mapping of all data flows as well as activities, data modelers will often construct numerous models using the same data. If successful, the completed model can serve as a guide for creating brand-new software or for re-engineering and improving current applications and programs. Many SQL data modelers exist to make data modeling in SQL databases easier.

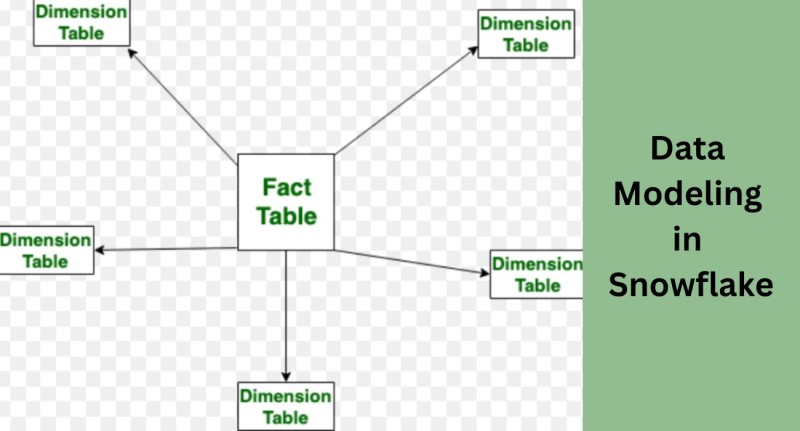

Data Modeling and Snowflakes

Customers can use any number of popular SQL modeling tools with the Snowflake Cloud Data Platform because it is ANSI SQL compatible. In addition, Snowflake has unveiled VARIANT, an innovative data type for semi-structured data storage (AVRO, JSON, XML, as well as others). Developers have an easier time re-engineering and reconstructing Snowflake databases thanks to Oracle SQL Developer Modeler (SDDM).

Advantages of data modelling

- Enhanced data precision, uniformity, consistency, as well as predictability Wider availability of useful insights

- Reduced time spent on development and improved efficiency in integrating data systems

- Updates to software and upkeep are quicker and cheaper.

- Improved speed in spotting mistakes and omissions

- Safer conditions

- More effective teamwork, including among non-programmers

- Data users must undergo accelerated Snowflake Training in Hyderabad as well as onboarding.

Approaches Types

There are four main types of thought when it comes to data modeling:

Hierarchy - A hierarchical database model stores information in interconnected entries with one-to-many relationships, creating a tree-like data structure. XML as well as GIS both typically employ hierarchical database architectures.

Relational - The relational data model, often known as a relational model, is a data management tool that facilitates the specification of data and queries. Data definition as well as query language for numerous relational data formats is SQL.

Connection between entities - The diagrammatic representation of data and its connections is important to entity-relationship frameworks. Entity-relationship models are graphical representations of relational data structures that help with comprehension.

Graph - Graph data models are representations of complicated interactions within data sets constrained by a specific domain.

Types of data models

There are essentially three distinct data models:

- First, on a conceptual level, we can organize, scope, as well as define business ideas and regulations by describing what a data system comprises.

- Second, Logical, outlining the implementation details of a data system to provide a road map regarding technical rules as well as data structures.

- Third, the data system's physical implementation is defined for a given use case.

Role of a modeller

Data modelers take high-level concepts for software systems and convert them into simple diagrams that clearly display data flows through the use of icons and descriptive language. To guarantee a thorough mapping of all data flows as well as activities, data modelers will frequently construct numerous models for the exact same data. Data architects and data modelers collaborate closely.

Comparison of Data Modeling and Database Design

By harmonizing with organizational needs, defining requirements for data, and designing to suit those requirements, data architecture establishes a road map for managing data assets.

Integration of new systems into a current system is an example of when database design along with data modeling are in sync. Data modeling enables a seamless integration of two systems through comparison of their data.

Cloud Data modelling and Snowflake data

Customers can use any of a wide variety of data tools learned in Snowflake training in Hyderabad for modeling designed for their individual needs as well as purposes because the Snowflake platform is ANSI SQL-compliant. For the purpose of storing semi-structured data (AVRO, JSON, XML, Parquet, and others), Snowflake has additionally developed the VARIANT data type. Developers also have an easier time re-engineering and reconstructing Snowflake databases thanks to Oracle SQL Developer Modeler (SDDM).

Why is Data Modeling crucial?

The numerous processes at all data-driven, modern organisations generate massive amounts of data. Data consolidation, cleansing of redundant information, and transformation into a form usable for analytics are all necessary due to the inherent diversity of corporate operations, systems, and procedures. To achieve this goal, it is required to engage in a Data Modeling activity to ensure which the data is consistently structured and stored in an easily consumable format.

An efficient data model also provides a stable basis for all Data Warehouse, allowing it to accommodate changes in data volumes and the addition or removal of data objects with relative ease.

Data Lifecycle Changes requirements

Different phases of the Data Lifecycle call for distinct approaches to Data Modeling. Data management needs change depending on the data's creation, storage, and retrieval processes. To be effective, a Data Model needs to take into account the circumstances of the Lifecycle phase in question.

What is the Single Most Crucial Factor when Modeling Data?

When deciding on a data model, it is important to take into account a number of factors. These factors change depending on the Data Lifecycle phase we're planning for. Here are some of these elements:

- Data development and alteration rates should be high, and it's important that even minor adjustments take place quickly and reliably on disk.

- Quickly obtaining data for reporting and analysis, be it a small or substantial amount.

- Transactions with ACID properties are those that are atomic, consistent, isolated, and durable.

- Several or all of a company's departments or operational facets.

- Access to the Finest Particles of Data - Depending on the context, it may be necessary to view data at the finest granularity possible.

Although the aforementioned considerations are not exhaustive, they do play a significant role in determining which data model to use.

What is the Data Vault?

Long-term archival storage of information from various operational systems is the goal of Data Vault, a database modeling approach. As an approach for analyzing past information, it emphasizes the importance of knowing where your data came from and addresses concerns like auditing, data tracing, loading time, and adaptability. In order for an auditor to determine where values came from, the data vault must store them alongside attributes such as record source as well as load date. Dan (Daniel) Linstedt created it in the year 2000.

Here is how he explains the Data Model:

The Data Vault Model is a set of tables with normalization with a focus on detail, history tracking, and unique linking to serve the needs of one or more business functions. It's a method that takes the best features of both 3rd normal form (3NF) as well as star schema and combines them into one. The layout is pliable, scalable, constant and modifiable to suit the requirements of any business.

Methods for Data Modeling in Contemporary Cloud-Based Databases

The limitations of conventional database systems in terms of scalability and performance inspired the development of the Snowflake system from the ground up. Customers of Snowflake don't need to speculate on capacity needs or invest in costly hardware. A contemporary warehouse can scale both in terms of performance (compute) as well as capacity (storage) independently thanks to the separation of compute and storage.

Snowflake also allows for the storage of semi-structured data through the use of data types such as VARIANT. The semi-structured data types like VARIANT can store data in its native format, like JSON, for contemporary sources of data like IoT devices, big data storage (Data Lakes), etc. Data Vault and Star Schema are two examples of data models that could benefit from using the data types for expedited ingestion. A Star Schema model's dimension table can accommodate a JSON document in its VARIANT column, for instance. Depending on the connections between the JSON attributes, this column may develop into several columns or distinct dimension tables.

In addition to the advantages of the Snowflake platform itself, a well-implemented data model can further these advantages by facilitating efficient data transformation and loading (ETL/ELT), cutting down on unnecessary duplication, and facilitating the efficient generation of Snowflake artifacts such as Compute Warehouses as well as databases.

Snowflake supports both the Data Vault and Star Schema Data Models, so users have a choice between the two depending on their needs. When the goal is to construct a Warehouse for storing historical data and guarantee consistent linking between data entities across multiple departments, a Data Vault model is an appropriate choice. Smaller projects with narrower analytic goals, which may necessitate the structuring of data at multiple levels of detail, are good candidates for the Star Schema model.

Conclusion

This blog is by no means comprehensive, but I hope it helps you along the way to selecting the best data model for the business you run. We'd love to talk with you further if you have any unanswered questions or require further assistance with the design, development, and implementation of your data product.

Author Bio:

Vinod Kasipuri is a seasoned expert in data analytics, holding a master's degree in the field. With a passion for sharing knowledge, he leverages his extensive expertise to craft enlightening articles. Vinod's insightful writings empower readers to delve into the world of data analytics, demystifying complex concepts and offering valuable insights. Through his articles, he invites users to embark on a journey of discovery, equipping them with the skills and knowledge to excel in the realm of data analysis. Reach Vinod at LinkedIn.